A Glimpse into AI-Powered Song Generation with LyricWave

In the realm of artificial intelligence, we’re embarking on an exciting journey where music and technology unite. LyricWave, although a personal project, serves as our canvas to explore how AI and music creation can harmonize. Join me as we take a closer look at LyricWave’s proof-of-concept (POC) journey, a captivating combination of art and advanced technology.

LyricWave isn’t just another AI experiment. The project’s purpose is clear: to showcase how AI and music can create harmony. This Proof of Concept (POC) is a creative playground, demonstrating the potential of AI in song generation. While it may be a personal project, it’s a testament to the endless possibilities when artistry and technology intertwine.

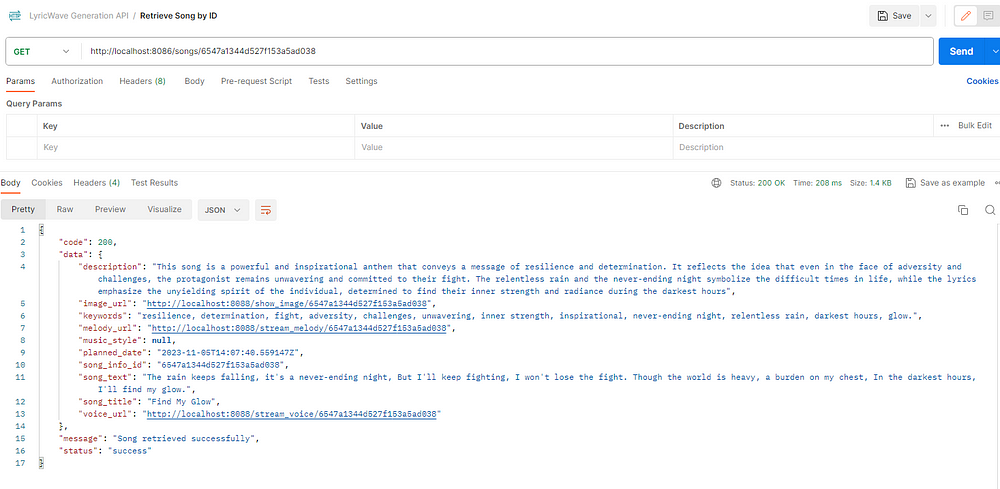

Let’s dive right into the magic that LyricWave creates. Here’s an example song, “Find my Glow,” freshly generated by the AI-powered platform. Take a moment to listen and let the music speak for itself:

If you find yourself captivated by the melody and the heartfelt lyrics, you’re not alone. LyricWave combines MusicGen, Suno AI Bark, and the Stable Diffusion Model to produce songs that resonate with emotion and artistry.

This is just a glimpse of what LyricWave can offer. If you’re curious to learn more about the technology behind this musical marvel and the fascinating world of AI-driven song generation, keep reading. We’re about to uncover the inner workings of LyricWave and the artistry that goes into each composition.

How LyricWave Works

LyricWave is a unique music generation platform that marries the creative art of songwriting with the advanced capabilities of artificial intelligence (AI). Here’s a more detailed breakdown of how it all comes together:

- Melody Magic: LyricWave’s journey begins with AI’s remarkable ability to compose melodies. AI analyzes the lyrical sentiment and crafts melodies that perfectly match the mood and emotion expressed in the lyrics. Think of it as your personal AI composer, creating melodies that evoke the right feelings.

- Voice Synthesis: Once we have our melodies, AI doesn’t stop there. Suno AI Bark, a text-to-audio model, steps in. It provides expressive and lifelike synthetic vocals that breathe life into the lyrics. These synthetic vocals give the songs created by LyricWave a sung, emotionally resonant voice.

- Harmonious Fusion: Now, the real magic happens. LyricWave seamlessly blends AI-generated melodies with the synthetic voices, resulting in complete MP4 tracks. These tracks offer a unique and immersive listening experience, capturing both the musical and lyrical essence in a way that only AI can. It’s a harmonious fusion of creativity and technology.

- Abstract Visuals: But LyricWave doesn’t stop at music and vocals. It goes a step further by generating captivating abstract visuals inspired by the lyrical content of the songs. These visuals provide a stunning visual representation of the song’s narrative, creating a multisensory experience for the audience.

- Elasticsearch-Powered Search: To make the music and lyrics accessible, LyricWave leverages Elasticsearch. It indexes the content of songs, enabling advanced searches based on keywords and phrases. This means you can easily discover and explore songs that match your specific interests and preferences.

- Seamless Integration with MongoDB: To ensure that all generated content is stored and managed efficiently, LyricWave seamlessly integrates with MongoDB. This NoSQL database stores comprehensive song details, including melodies, synthetic voices, abstract images, and metadata.

- Docker-Powered Workflow: The entire music generation pipeline is orchestrated using Docker Compose. This simplifies the deployment and management of the system, ensuring a smooth and efficient workflow.

- Apache Airflow DAG: The music generation process is modeled as a Directed Acyclic Graph (DAG) within Apache Airflow. This orchestration tool allows you to schedule, monitor, and manage music creation tasks, making the entire process user-friendly and efficient.

The result is a platform that is perfect for artists, songwriters, or anyone looking for a unique musical experience. LyricWave transforms words into beautiful melodies, and it’s all made possible by the power of AI.

In a nutshell, LyricWave leverages AI to compose melodies, create expressive vocals, merge them into complete songs, add stunning visuals, and manage the entire process seamlessly. It’s a technological marvel that brings music and AI together in perfect harmony.

Architecture Overview

In the LyricWave music generation platform, various architectural components work in harmony to deliver a seamless and powerful experience for both creators and music enthusiasts. Let’s explore the purpose and role of each element:

1. Apache Airflow:

- Purpose: Apache Airflow serves as the orchestrator of the entire music generation process. It schedules, monitors, and manages the execution of complex workflows represented as Directed Acyclic Graphs (DAGs). Airflow ensures that all the tasks, from generating melodies to creating final songs, are executed efficiently and in the desired sequence.

2. MongoDB:

- Purpose: MongoDB is used as the primary database for storing comprehensive song details, including metadata, lyrics, generated melodies, and more. It plays a central role in data management, enabling seamless storage, retrieval, and updates throughout the music creation process.

3. HAProxy:

- Purpose: HAProxy serves as a load balancer, distributing incoming requests to the nodes of the Song Generation API and the Streaming API. This ensures optimal resource utilization and load distribution for the various tasks involved in music generation.

4. Celery:

- Purpose: Celery is the distributed task queue system responsible for executing the computationally intensive tasks involved in generating music. It efficiently parallelizes the workload and utilizes multiple worker nodes to enhance processing speed and scalability.

5. Elasticsearch:

- Purpose: Elasticsearch is used to enhance the search capabilities within the platform. It indexes the content of songs, allowing users to perform advanced searches based on specific terms. This improves the discoverability of songs and enhances the overall user experience.

6. Song Generation API in Flask (Multiplexed):

- Purpose: The Song Generation API, powered by Flask, offers a convenient way to manage songs. Users can create new songs, initiate the execution of Airflow DAGs, delete songs, list existing songs, and perform searches using the Elasticsearch index. It serves as the gateway for users to interact with the LyricWave platform.

7. Streaming API in Flask:

- Purpose: The Streaming API in Flask enables users to stream and play the generated melodies, voice files, and final songs. This real-time audio streaming feature allows listeners to experience the music as it is being generated, enhancing engagement and entertainment.

8. MinIO:

- Purpose: MinIO is utilized as a high-performance object storage system for the platform. It is responsible for storing various files, including generated audio and images, and provides secure and efficient access to these resources.

Together, these architectural components create a dynamic and integrated ecosystem that powers LyricWave, transforming words into beautiful melodies and immersive music experiences. Whether you’re a creator or a listener, this architecture ensures that the magic of AI-powered music generation is at your fingertips.

The Power of Apache Airflow and AI Technologies

At the heart of LyricWave’s song generation pipeline lies Apache Airflow, a powerful open-source platform designed for orchestrating complex workflows. This DAG (Directed Acyclic Graph)-based system allows for the efficient scheduling, monitoring, and management of the entire music creation process.

Simplified Workflow Management

Apache Airflow simplifies the process of song generation by breaking it down into smaller, manageable tasks. These tasks are represented as nodes in a directed acyclic graph, allowing for a clear and organized workflow. Each task can be scheduled independently, making it easier to parallelize tasks for faster results.

Fault Tolerance and Error Handling

One of the key benefits of using Apache Airflow is its fault tolerance and error handling capabilities. If any part of the song generation process fails, Apache Airflow can automatically retry the failed task a configurable number of times before marking it as failed, so a transient error does not derail the whole run. This helps keep the song generation process robust and reliable.
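To make the retry behaviour concrete, here is a minimal sketch. The `retries` and `retry_delay` keys are standard Airflow task arguments, but the values shown are assumptions rather than LyricWave's actual settings, and `run_with_retries` and `flaky_step` are hypothetical helpers that only imitate the behaviour in plain Python.

```python
from datetime import timedelta

# Assumed values: `retries` and `retry_delay` are real Airflow task arguments,
# but the numbers below are illustrative, not taken from the LyricWave DAG.
default_args = {
    "retries": 3,
    "retry_delay": timedelta(minutes=5),
}


def run_with_retries(task, retries):
    """Imitate retry semantics: one initial attempt plus up to `retries` more."""
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            if attempts > retries:
                raise  # retries exhausted, so the task is considered failed


calls = {"n": 0}


def flaky_step():
    """Hypothetical pipeline step that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")
    return "melody_generated"


result, attempts = run_with_retries(flaky_step, default_args["retries"])
print(result, attempts)
```

In a real DAG, passing a dictionary like `default_args` to the DAG applies these settings to every task unless a task overrides them.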

Real-time Monitoring

LyricWave leverages Apache Airflow’s real-time monitoring features, making it easy to keep a close eye on the progress of song generation tasks. Users can access a user-friendly dashboard to track the status of ongoing tasks, making it simple to identify and address any issues that may arise during the process.

Why MusicGen, Suno-AI/Bark, and Stable Diffusion?

The choice of technology stack plays a critical role in LyricWave’s ability to generate captivating songs. Here’s a closer look at the AI-powered technologies selected for this project:

- MusicGen from AudioCraft: MusicGen is a simple yet controllable model for music generation. It uses a single-stage auto-regressive Transformer model, making it efficient and effective. What sets it apart is the ability to generate melodies without the need for self-supervised semantic representations. MusicGen was trained on an extensive dataset of 20,000 hours of licensed music, ensuring that the generated music is of high quality. This technology perfectly aligns with LyricWave’s goal of creating harmonious compositions.

- Suno-AI/Bark: Suno-AI Bark is a transformer-based text-to-audio model developed by Suno. It’s capable of producing highly realistic and multilingual speech, as well as other audio elements like music, background noise, and even nonverbal communications. The technology is incredibly versatile and adds expressive, lifelike synthetic vocals to LyricWave’s compositions, enhancing the overall auditory experience.

- Stable Diffusion Model: When it comes to creating stunning song cover images, the Stable Diffusion Model is the unsung hero. This latent text-to-image diffusion model is designed to generate photorealistic images based on textual input. It transforms the lyrics and emotions of each song into captivating visual representations. By harnessing the power of the Stable Diffusion Model, LyricWave adds an extra layer of depth to the music, providing a complete sensory experience.

Advantages of This Approach

LyricWave’s technology stack offers numerous advantages. By using MusicGen, Suno-AI/Bark, and the Stable Diffusion Model, LyricWave can create songs that resonate on a deeper level. These technologies provide:

- Quality and Realism: MusicGen ensures the melodies are not just harmonious but also of high quality. Suno-AI Bark’s expressive vocals add a realistic touch, enhancing the emotional connection with listeners.

- Visual Enhancement: The Stable Diffusion Model generates visually stunning song cover images, offering an artistic representation of the music’s emotions.

- Efficiency and Reliability: Apache Airflow’s DAG-based approach streamlines the song generation process, allowing for efficient task scheduling and real-time monitoring. This ensures the entire process is efficient and reliable.

A Harmonious Blend of Creativity and Technology

LyricWave’s approach represents a harmonious blend of creativity and technology. It takes the art of music creation and elevates it with AI-powered components, offering a unique and immersive musical experience.

Delving into the Creative Process: Unraveling the Anatomy of the Music Generation DAG

The provided file defines a DAG (Directed Acyclic Graph) named ‘music_generation_dag.’ A DAG is a graphical representation of a set of tasks with dependencies, where each task represents a step in a process, and the arrows between tasks indicate their execution order. In this case, the DAG is used to orchestrate and automate music generation using LyricWave.

The operators are connected in sequence using the >> notation, signifying that tasks must be executed in the specified order. This ensures that the melody generation precedes vocal generation, and so on, until the song cover is eventually generated.

This DAG creates an automated workflow that follows a specific sequence to generate a complete song. Each operator triggers a crucial stage in the music generation process and uses specific credentials and settings to access the necessary database and storage. The DAG ensures that all these tasks are executed in the correct order without conflicts.
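The `>>` chaining can be illustrated without Airflow itself. The sketch below uses a tiny stand-in `Task` class (hypothetical, not an Airflow class) that mimics how `a >> b` registers `b` as downstream of `a` and returns `b` so that chains read left to right. The task names, and the placement of the indexing step at the end, are assumptions.

```python
class Task:
    """Minimal stand-in for an Airflow operator; it only models `>>` ordering."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []

    def __rshift__(self, other):
        self.downstream.append(other)
        return other  # returning the right-hand task lets a >> b >> c chain


def execution_order(first):
    """Walk a purely linear chain from the first task to the last."""
    order, current = [], first
    while current is not None:
        order.append(current.task_id)
        current = current.downstream[0] if current.downstream else None
    return order


# Hypothetical task ids mirroring the operators described in this article.
melody = Task("generate_melody")
voice = Task("generate_voice")
song = Task("generate_song")
cover = Task("generate_song_cover")
index = Task("index_to_elasticsearch")

melody >> voice >> song >> cover >> index

order = execution_order(melody)
print(order)
```

In a real Airflow DAG file the same final line would connect actual operator instances declared inside a `DAG` context.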

Now, let’s dive into each operator within the DAG:

Harmonious Creations: The Melody Generation Operator

This operator is responsible for generating the song’s melody. It leverages provided information, such as credentials to access the database and MinIO storage (an open-source object storage server), to perform this task.

The GenerateMelodyOperator is a custom Airflow operator designed to generate musical melodies from provided text input using the MusicGen model from Facebook’s AudioCraft. It connects to MongoDB and MinIO for data storage and uses the Hugging Face Transformers library for the generation process. The execute method orchestrates the entire process: generating the melody, storing it, and updating the MongoDB document. The operator is a crucial part of the music generation DAG within LyricWave, a project focused on AI-driven song generation.

Key Components:

- Pre-Trained Model: The operator leverages a pre-trained model provided by Facebook’s AudioCraft. This model generates a musical melody conditioned on text input: the text is first encoded into a format the model can consume, and the model then produces the audio.

- Data Storage: The generated musical melodies are stored as WAV audio files. The operator connects to a MinIO server, a high-performance object storage system, to store these audio files. It relies on MinIO’s endpoint, access key, and secret key for authentication and access to the storage.

- Metadata Management: Alongside audio storage, the operator manages metadata related to the generated melodies. It integrates with a MongoDB database, storing information about the generated melodies, such as their titles and file paths. This metadata is valuable for organizing and cataloging the generated content.

- Error Handling: The operator is equipped to handle exceptions and errors that might occur during the melody generation process. If any errors are encountered, they are logged appropriately, ensuring that issues are identified and addressed.

- Integration with Hugging Face: The operator makes extensive use of the Hugging Face Transformers library. Hugging Face is a prominent platform for natural language processing and machine learning models, offering a rich ecosystem of pre-trained models for a wide range of tasks.

In this case, the operator utilizes the Transformers library to interact with the Facebook AudioCraft model. Here’s how it works:

- AutoProcessor: The operator initializes an AutoProcessor from the Transformers library. This processor plays a crucial role in encoding the provided song text into a format that can be fed into the model. It ensures that the text input is correctly prepared for melody generation.

- MusicgenForConditionalGeneration: The operator loads the MusicgenForConditionalGeneration model from the Hugging Face Transformers library. This pre-trained model generates musical content conditioned on the encoded text input.

- Text-to-Melody Generation: Using the provided text input and the model, the operator generates a musical melody. The model processes the encoded text and produces audio data that represents the melody.

- WAV Audio File: The resulting audio data is saved as a WAV audio file. This file is uniquely named based on the song’s unique identifier (song_id). The operator employs the SciPy library to write the audio data to a WAV file with the specified sampling rate.

DAG Integration: The GenerateMelodyOperator is designed to be part of a larger Directed Acyclic Graph (DAG) within Apache Airflow. This DAG orchestrates the entire music generation process, and this operator is one of the key steps in the workflow.
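The text-to-melody steps above can be sketched roughly as follows. This is not the operator's actual code: the checkpoint name `facebook/musicgen-small`, the `_melody.wav` file naming, and the `max_new_tokens` value are assumptions, and the MinIO upload and MongoDB update are left out. The heavy imports live inside the function so the module can be loaded without downloading a model.

```python
CHECKPOINT = "facebook/musicgen-small"  # assumed checkpoint, not confirmed by the project


def melody_filename(song_id: str) -> str:
    """Hypothetical naming scheme: one WAV file per song identifier."""
    return f"{song_id}_melody.wav"


def generate_melody(song_text: str, song_id: str, max_new_tokens: int = 256) -> str:
    """Generate a melody from text and write it to a WAV file; returns the path."""
    # Imported lazily because these libraries are large and optional here.
    from scipy.io import wavfile
    from transformers import AutoProcessor, MusicgenForConditionalGeneration

    processor = AutoProcessor.from_pretrained(CHECKPOINT)
    model = MusicgenForConditionalGeneration.from_pretrained(CHECKPOINT)

    # Encode the text prompt into tensors the model can consume.
    inputs = processor(text=[song_text], padding=True, return_tensors="pt")

    # Autoregressively generate audio; more tokens means a longer clip.
    audio_values = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # The sampling rate comes from the model's audio encoder configuration.
    sampling_rate = model.config.audio_encoder.sampling_rate
    path = melody_filename(song_id)
    wavfile.write(path, rate=sampling_rate, data=audio_values[0, 0].cpu().numpy())
    return path


# Example call (downloads the model on first use):
# generate_melody("a hopeful piano ballad about finding light in the rain", "abc123")
```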

Vocal Synthesis Operator: Breathing Life into Lyrics

The next operator in the flow is GenerateVoiceOperator, which generates the song's vocals. Just like GenerateMelodyOperator, it is configured with the same credentials and parameters to access the database and MinIO.

The GenerateVoiceOperator is a custom Airflow operator responsible for generating vocal performances from song lyrics. It employs the 'suno/bark' model from the Transformers library to create expressive vocals. Here's how it works:

- Input: The operator takes the text of a song as its input. The song text should be formatted to start and end with the musical note symbol “♪.” This symbol indicates the beginning and end of the musical performance and helps guide the model’s voice generation process.

- Processing: The operator utilizes the ‘suno/bark’ model from the Transformers library. It first adds the “♪” symbols to the provided song text to create a complete musical context.

- Voice Generation: Using the musical context, the model generates a voice performance that complements the lyrics. The resulting voice is expressive and lifelike.

- Audio File: The generated voice is saved as a WAV file. The file name is based on the song’s unique identifier, making it easy to reference.

- Storage: The operator stores the generated voice file in a MinIO server, which acts as a storage system for speech files.

- Update MongoDB: The operator updates a MongoDB document associated with the song. It records the name of the generated voice file, marks the song status as “voice_generated,” and records the timestamp of the voice generation.

This operator plays a crucial role in transforming song lyrics into vocal performances, adding an extra layer of emotional depth to the music. It’s a key component in the creative process of LyricWave.
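The step that wraps lyrics in “♪” symbols is simple enough to sketch on its own. The helper name `wrap_lyrics` is hypothetical; the idea is just to make sure the text starts and ends with exactly one note symbol, whether or not the caller already added them.

```python
NOTE = "♪"


def wrap_lyrics(text: str) -> str:
    """Return the lyrics surrounded by a single pair of musical note symbols."""
    # Remove any surrounding whitespace and existing note symbols first,
    # so that wrapping the same text twice gives the same result.
    core = text.strip().strip(NOTE).strip()
    return f"{NOTE} {core} {NOTE}"


prompt = wrap_lyrics("In the darkest hours, I'll find my glow.")
print(prompt)
```

The wrapped string is what would then be passed to the Bark processor as the text prompt.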

Harmonious Fusion: The GenerateSongOperator

Once both the melody and vocals are generated, the GenerateSongOperator comes into play. Its role is to combine these two tracks to create the complete song. It also uses the same credentials and settings.

Its primary functions are as follows:

- Input and Retrieval: The operator starts by retrieving a unique identifier for the song (referred to as song_id) from the preceding task using XCom. This identifier is crucial for connecting various components of the song.

- Database Interaction: It connects to a MongoDB database to fetch relevant information about the song. This includes the file names for the melody and voice audio components that were generated earlier in the process.

- MinIO Access: To access the melody and voice audio files, the operator establishes a connection with a MinIO server, which serves as a storage system for these files.

- Audio Combination: The heart of the operation lies in combining the melody and voice audio. It loads both audio files and performs several audio processing tasks:

  - Normalization: Attempts to normalize the voice audio to enhance audio quality.

  - Fading: Applies fade-in and fade-out effects to the voice audio to achieve smoother transitions.

  - Amplification: Adjusts the volume of both the melody and voice audio components.

  - Resampling: Ensures that both audio components have the same sample rate and channels for compatibility.

  - Duration Matching: Makes sure that both audio components have the same duration.

- Combination: The operator combines the melody and voice audio to create a harmonious and complete song. The resulting audio is stored in a format suitable for final playback.

- MinIO Storage: The generated combined audio is stored in the MinIO server for easy access and retrieval.

- Database Update: The operator updates the MongoDB document associated with the song, recording the name of the generated final song file, marking the song’s status as “final_song_generated,” and timestamping the completion.

In essence, the GenerateSongOperator plays a pivotal role in merging the melodic and vocal elements to create a fully developed song, making it a vital component in the LyricWave music generation process.
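To show what the audio combination steps amount to, here is a simplified sketch that works on plain lists of float samples in the range -1.0 to 1.0 instead of real audio files. It is an illustration under assumptions, not the operator's code: the gain values and fade length are made up, resampling is omitted (both tracks are assumed to share a sample rate already), and a real implementation would more likely rely on an audio library.

```python
def normalize(samples, peak=1.0):
    """Scale samples so the loudest one reaches `peak`."""
    top = max((abs(s) for s in samples), default=0.0)
    if top == 0.0:
        return list(samples)
    return [s * peak / top for s in samples]


def fade(samples, n):
    """Apply a linear fade-in and fade-out over `n` samples at each end."""
    out = list(samples)
    n = min(n, len(out) // 2)
    for i in range(n):
        gain = i / n
        out[i] *= gain
        out[-1 - i] *= gain
    return out


def match_duration(samples, length):
    """Trim or zero-pad so the track has exactly `length` samples."""
    return list(samples[:length]) + [0.0] * max(0, length - len(samples))


def mix(melody, voice, melody_gain=0.5, voice_gain=1.0, fade_len=2):
    """Normalize and fade the voice, align durations, then sum with gains."""
    voice = fade(normalize(voice), fade_len)
    length = max(len(melody), len(voice))
    melody = match_duration(melody, length)
    voice = match_duration(voice, length)
    mixed = [melody_gain * m + voice_gain * v for m, v in zip(melody, voice)]
    return [max(-1.0, min(1.0, s)) for s in mixed]  # clip to the valid range


mixed = mix(melody=[0.2] * 6, voice=[0.5, 0.5, 0.5, 0.5])
print(mixed)
```

Lowering the melody gain relative to the voice is one common way to keep vocals intelligible over the backing track.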

Visual Elegance: The GenerateSongCoverOperator

The GenerateSongCoverOperator is a specialized Airflow operator designed for generating visually captivating cover images for songs using the Stable Diffusion model.

Here's how it works:

- Input and Retrieval: The operator starts by retrieving a unique identifier for the song (referred to as song_id) from the preceding task using XCom. This identifier is crucial for linking the song text with its cover image.

- Database Interaction: It connects to a MongoDB database to fetch the song’s text description. This description is the basis for creating the cover image.

- Image Generation: The core of the operation involves using the Stable Diffusion model to generate a song cover image based on the provided song text. The model translates the textual description into a visually appealing image that represents the essence of the song.

- Image Storage: Once the image is generated, it’s saved as a temporary file with a .jpg extension.

- MinIO Access: The operator establishes a connection with a MinIO server to store the generated song cover image. MinIO acts as a repository for images and makes them easily accessible.

- Database Update: The operator updates the MongoDB document associated with the song, recording the name of the generated song cover image, marking the song’s status as “image_cover_generated,” and timestamping the completion.

In summary, the GenerateSongCoverOperator adds a visual element to songs by creating cover images that encapsulate the essence of the music. It's a crucial step in enhancing the overall appeal of songs generated by LyricWave.
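Each operator ends with the same kind of MongoDB update: record a file name, set a status, and add a timestamp. A minimal sketch of that pattern follows. The field names (`status`, `cover_file`, the `_at` suffix) and the use of `song_id` as the document `_id` are assumptions, and `FakeCollection` is a hypothetical stand-in so the example runs without a database. With pymongo, the same `update_one(filter, update)` call would be made on a real collection.

```python
from datetime import datetime, timezone


def mark_stage_done(collection, song_id, status, file_field=None, file_name=None):
    """Set the song's status and timestamp, optionally recording a file name."""
    fields = {"status": status, f"{status}_at": datetime.now(timezone.utc)}
    if file_field and file_name:
        fields[file_field] = file_name
    # `$set` only touches the listed fields and leaves the rest of the document intact.
    return collection.update_one({"_id": song_id}, {"$set": fields})


class FakeCollection:
    """Hypothetical in-memory stand-in that records update_one calls."""

    def __init__(self):
        self.calls = []

    def update_one(self, filter_doc, update_doc):
        self.calls.append((filter_doc, update_doc))


songs = FakeCollection()
mark_stage_done(
    songs,
    song_id="abc123",
    status="image_cover_generated",
    file_field="cover_file",
    file_name="abc123_cover.jpg",
)
filter_doc, update_doc = songs.calls[0]
print(filter_doc, update_doc["$set"]["status"])
```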

Seamless Data Integration: The IndexToElasticsearchOperator

The IndexToElasticsearchOperator is an essential Airflow operator that facilitates the process of indexing data into an Elasticsearch database.

Its primary functions are as follows:

- Configuration: The operator is configured with the connection details for the Elasticsearch host and the specific index where the data will be stored.

- Input Retrieval: It retrieves the song identifier (song_id) from the configuration passed to the DAG via the execution context. This identifier serves as a reference to the song's data.

- MongoDB Interaction: The operator connects to a MongoDB database to retrieve the text of the song associated with the song_id. This text is what will be indexed in Elasticsearch.

- Indexing: The core function of the operator is to index the song text into an Elasticsearch database. It constructs a document that includes the song_id and the song text and uses the Elasticsearch Python client to perform the indexing.

- Database Update: After successful indexing, the operator updates the MongoDB document associated with the song, marking its status as “song_indexed” and timestamping the indexing operation.

In summary, the IndexToElasticsearchOperator streamlines the process of storing song data in Elasticsearch, making it easily searchable and accessible. It's a critical step in ensuring that the data is available for advanced search and retrieval functionalities in the LyricWave application.
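The document that gets indexed, and the kind of query that later finds it, might look like the sketch below. The index name `songs` and the field name `song_text` are assumptions, and the helper functions are hypothetical. They only build the arguments; the commented calls show how they would be passed to the 8.x Elasticsearch Python client (older client versions take a `body` argument instead).

```python
def build_index_request(song_id, song_text, index="songs"):
    """Arguments for indexing one song; the song_id doubles as the document id."""
    return {
        "index": index,
        "id": song_id,
        "document": {"song_id": song_id, "song_text": song_text},
    }


def build_match_query(phrase):
    """Full-text match query against the assumed `song_text` field."""
    return {"match": {"song_text": phrase}}


request = build_index_request("abc123", "The rain keeps falling, it's a never-ending night")
query = build_match_query("rain")
print(request["document"], query)

# With a running cluster and the 8.x client this would become:
#   es.index(**request)
#   es.search(index="songs", query=query)
```

Using the song_id as the Elasticsearch document id means re-indexing the same song overwrites the previous entry rather than creating a duplicate.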

LyricWave Platform Features: Empowering Music Creation with Celery Flower, Flask API, Streaming, and MongoDB Logs

LyricWave offers a comprehensive set of tools and features to enhance the music generation experience. These features include:

- Celery Flower for Workload Monitoring: To keep an eye on the activity and workload of workers, LyricWave employs Celery Flower. This powerful tool provides real-time insights into the status and performance of the tasks, allowing for efficient monitoring and management of the music generation process.

- Flask-Based Song Generation API: LyricWave provides a user-friendly API, known as the Song Generation API, built with Flask. This API offers various functionalities, including:

  - Creating New Songs: You can generate new songs by triggering the execution of the DAG.

  - Deletion of Songs: Remove songs that are no longer needed.

  - List of Songs: Obtain a list of all available songs.

  - Search Songs: Utilize the Elasticsearch index to perform advanced searches, making it easy to find specific songs based on criteria such as lyrics or song text.

- Streaming API: LyricWave’s Streaming API allows you to stream melodies, voice audio, and the final generated songs. This feature enhances the listening experience by providing on-the-fly access to the music components.

- MongoDB Logs: For comprehensive tracking and debugging, LyricWave stores logs in a MongoDB collection. These logs contain detailed information about the execution of the DAG, making it easier to identify and troubleshoot errors and failures.

With these tools and features, LyricWave aims to provide a seamless and enjoyable music generation experience. Whether you’re a music enthusiast or an artist, LyricWave’s platform is designed to cater to your creative needs.
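As an illustration of how an API could trigger the DAG for a new song, the sketch below builds (but does not send) a request to the Airflow 2.x stable REST API, passing the song_id in the run configuration. How the Song Generation API actually triggers the DAG is not shown in this article, so treat this as one possible approach. The base URL is an assumption and authentication is omitted.

```python
import json
from urllib.request import Request

AIRFLOW_BASE_URL = "http://localhost:8080"  # assumed address of the Airflow webserver
DAG_ID = "music_generation_dag"


def build_trigger_request(song_id: str) -> Request:
    """Build a POST request that starts a DAG run with song_id in its conf."""
    payload = json.dumps({"conf": {"song_id": song_id}}).encode("utf-8")
    return Request(
        url=f"{AIRFLOW_BASE_URL}/api/v1/dags/{DAG_ID}/dagRuns",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_trigger_request("abc123")
print(req.get_method(), req.full_url)

# Sending it would require credentials and a running Airflow instance:
#   from urllib.request import urlopen
#   urlopen(req)
```

Inside the DAG, tasks can then read the identifier from the run configuration, which matches how the indexing operator is described as obtaining its song_id.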

Before You Go, You Must Hear This

As we conclude this journey through the creative wonders of LyricWave, I invite you to stay a little longer. Before you go, there’s something special you simply must experience. I’ve saved the best for last — a final showcase of AI-generated songs that will leave you in awe. These songs are not just a testament to technology’s potential; they are a heartfelt expression of art, emotions, and innovation.

Before you close the door on this world of AI-assisted music creation, give yourself the gift of listening to these melodies, lyrics, and harmonious tracks. It’s a reminder that creativity knows no bounds when paired with cutting-edge technology.

So, before you go, let these songs touch your heart and inspire your imagination. You’ll find them on SoundCloud, a testament to what LyricWave can achieve. Enjoy, and thank you for embarking on this musical journey with me.

“Find my Glow”

Song Lyrics: ♪ The rain keeps falling, it’s a never-ending night, But I’ll keep fighting, I won’t lose the fight. Though the world is heavy, a burden on my chest, In the darkest hours, I’ll find my glow. ♪

“Rise and Shine”

Song Lyrics: ♪ Rise and shine, you’re a star so bright, With your spirit strong, take flight, In your eyes, a world of possibility, Embrace the day, and set your spirit free. ♪

“Fading Echoes”

Song Lyrics: ♪ I’m lost in the shadows of our yesterdays, Fading echoes, in a melancholy haze. Your absence lingers, in the spaces between, In this quiet solitude, I’m forever unseen. ♪

“Fading Memories”

Song Lyrics: ♪ I’m drowning in these fading memories, Lost in time, lost at sea. Your ghost still haunts my heart, it seems, In this endless night, I’m lost in dreams. ♪

“Broken Promises”

Song Lyrics: ♪ Broken promises, shattered dreams, In the silence, nothing’s as it seems. Our love, once strong, now torn apart, In the ruins of our world, I search for a fresh start. ♪

“Wounds of Time”

Song Lyrics: ♪ Wounds of time, they run so deep, In the dark, my secrets I keep. The echoes of the past, they won’t subside, In the shadows of my heart, I silently hide. ♪

This is it. I have really enjoyed developing and documenting this little project. Thanks for reading it. I hope this is the first of many. Special thanks to the open-source community and the contributors who have made this project possible.

If you are interested in the complete code, here is the link to the public repository: